Most eCommerce websites use a layered (aka faceted) navigation system, which lets store users browse product listings using predefined filters or sorting options. This is extremely valuable from a user experience point of view. When filters are applied, this kind of navigation system usually adds URL parameters (aka query strings) to the URL; the parameters come after a question mark (?).

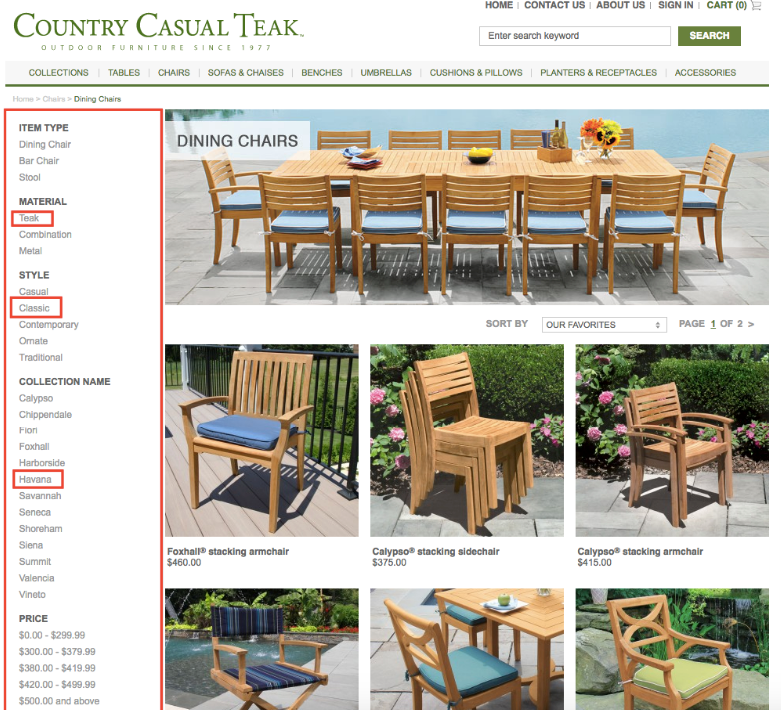

As an example of a layered navigation system that adds URL parameters, let’s look at the Magento eCommerce store of Country Casual Teak, an outdoor furniture manufacturer and retailer.

Country Casual Teak Magento eCommerce store

Taking SEO into account

Say we want to buy some outdoor dining chairs for the backyard. We would navigate to the dining chairs category page.

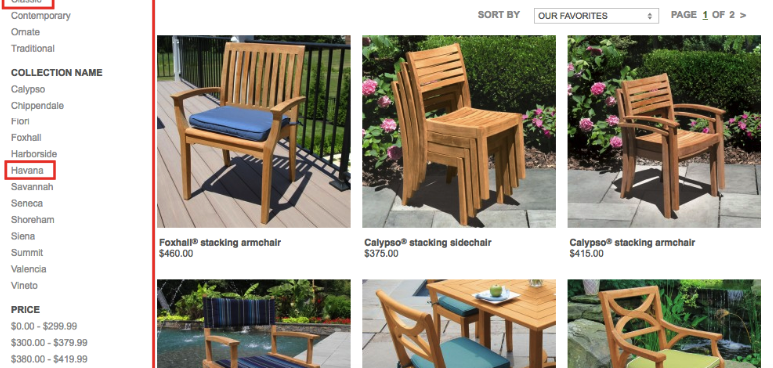

A typical product category page, as in this example, looks like the one in the image above.

When users already have in mind what they want and wish to narrow down the full offer of available chairs, they use the layered navigation system, which in this case (and oftentimes) is on the left of the page, as seen in the image above.

In this example, let’s say that the user wants a classic-style teak chair from the Fiori collection, so the respective values are selected in the filter.

In a typical Magento layered navigation system, the filter parameters are appended to the URL, which for our example would look like this:

www.store.com/chairs/dining-chairs?collection_name=Fiori&material=Teak&style=Classic
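To make the structure of such a URL concrete, here is a minimal Python sketch that splits the example URL into its path and its filter parameters using only the standard library. The `https://` scheme is added just so the URL parses, and the function name `split_filtered_url` is illustrative, not part of Magento.

```python
from urllib.parse import urlsplit, parse_qsl


def split_filtered_url(url):
    """Return (path, filters), where filters maps parameter names to values."""
    parts = urlsplit(url)
    # parse_qsl yields (name, value) pairs from everything after the "?"
    filters = dict(parse_qsl(parts.query))
    return parts.path, filters


path, filters = split_filtered_url(
    "https://www.store.com/chairs/dining-chairs"
    "?collection_name=Fiori&material=Teak&style=Classic"
)
print(path)     # /chairs/dining-chairs
print(filters)  # {'collection_name': 'Fiori', 'material': 'Teak', 'style': 'Classic'}
```

The path is identical to that of the unfiltered category page; only the query string differs, which is why search engines can end up treating the two as separate pages.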

When filters are applied, the page’s content stays the same or very similar; it is only organized or filtered differently. This is one of the most common SEO pitfalls for eCommerce websites: a layered navigation system that is not implemented and managed correctly makes it hard for search engines to read and index the pages correctly. So, to help search engines (and for them to return the favor), certain actions must be taken.

There are two main SEO issues with URL parameters: duplicate content and keyword cannibalization.

Duplicate content:

If applying a filter or sorting option changes the URL by adding a parameter, but most of the page content, the meta title, and the meta description stay the same, search engines will see the page as a duplicate of the default product category page, IF THE SEARCH ENGINE IS NOT INFORMED ABOUT IT. And duplicate content issues have a considerable negative impact on PageRank and link juice.

Keyword cannibalization:

This issue happens when multiple pages of an eCommerce site target similar or identical keywords. The pages then compete with one another for the same spot for a particular search query, and the search engine acts as the judge that decides which page shows in the SERP. Ideally, we should be the ones who decide which page shows, not the search engine.

Keyword cannibalization can happen due to similar or identical page titles, meta descriptions, and headings, and the performance of all the competing pages goes down as a result.
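One rough way to spot candidates for both issues is to group crawled URLs by page title and look for titles shared by more than one URL. The sketch below assumes you already have (URL, title) pairs from a crawl; the sample data and the function name `find_title_collisions` are made up for illustration.

```python
from collections import defaultdict


def find_title_collisions(pages):
    """Group URLs by normalized title; keep only titles used by 2+ URLs."""
    by_title = defaultdict(list)
    for url, title in pages:
        by_title[title.strip().lower()].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


# Hypothetical crawl output: (URL, page title) pairs.
pages = [
    ("/chairs/dining-chairs", "Dining Chairs"),
    ("/chairs/dining-chairs?material=Teak", "Dining Chairs"),
    ("/chairs/dining-chairs?dir=asc&order=price", "Dining Chairs"),
    ("/tables/dining-tables", "Dining Tables"),
]

collisions = find_title_collisions(pages)
for title, urls in collisions.items():
    print(title, "->", urls)
```

A shared title is only a hint, not proof of cannibalization, but filtered and sorted variants of one category page tend to show up in such a report immediately.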

There are three main ways to address the SEO issues created by layered navigation systems: canonical tags + meta robots tags; URL parameter specification in Search Console; and crawler restrictions using robots.txt.

Canonical tags + Meta (follow + noindex) robots tags

If you are in the process of building an eCommerce store on Magento, or are considering adding a faceted navigation system to your existing store, and therefore do not yet have unwanted filtered pages in Google’s index, then canonical tags will do the trick.

Implementing the canonical tag on the category pages and canonicalizing the filtered and sorted pages will not only tell crawler bots that the canonicalized pages are variants of the main category page, but will also pass link value from the filtered pages to the primary version of the page. The canonical tag should be present on an eCommerce store even if it does not have a faceted navigation system, so make sure your canonical tags are up and running.

However, for an eCommerce store that already has issues with faceted navigation, i.e. hundreds or thousands of duplicate, near-duplicate, and low-quality pages in Google’s index, adding canonical tags might not be enough, since Google tends to take a very long time to recognize new canonical tags and adjust its index. In addition, canonical tags do not meet Google’s requirements for manual URL removal from the index (using Search Console’s Remove URLs tool).
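As a minimal sketch of what canonicalization means here, the Python snippet below maps any filtered or sorted URL back to its category page by dropping the query string, then renders the corresponding `<link rel="canonical">` element. It assumes every parameter should be dropped, which may not hold for a real store (pagination, for instance, is often handled separately), and the function names are illustrative.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Drop query string and fragment so all variants map to one URL."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def canonical_link_tag(url):
    """Render the canonical link element for the page's <head>."""
    href = escape(canonical_url(url), quote=True)
    return '<link rel="canonical" href="%s" />' % href


print(canonical_link_tag(
    "https://www.store.com/chairs/dining-chairs"
    "?collection_name=Fiori&material=Teak&style=Classic"
))
# <link rel="canonical" href="https://www.store.com/chairs/dining-chairs" />
```

The unfiltered category page produces the same tag, pointing at itself, so every variant agrees on a single primary URL.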

In this case, in addition to the canonical tags, meta robots tags (or the X-Robots-Tag HTTP header) need to be introduced. More precisely, the filtered pages that add URL parameters need a “noindex, follow” robots tag that looks like this:

<meta name="robots" content="NOINDEX,FOLLOW" />

This tag makes sure that search engine crawlers will not index the filtered or sorted pages but will continue to follow the links and pass value to other pages. Additionally, pages that are already in Google’s index and carry the noindex robots tag qualify for manual removal via Search Console’s “Remove URLs” tool, which can offer a quick fix to the main problem. Note that if you are going to use meta robots tags, do not use a “noindex, nofollow” tag for the filtered/sorted pages, since this will leak PageRank.
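The rule "filtered or sorted pages get noindex, follow; everything else stays indexable" can be sketched in a few lines of Python. The parameter names below are assumptions: the three filters come from the example URL, and `dir` and `order` are the sorting parameters. A real store would list its own attributes, and in Magento this logic would live in the theme or a module rather than a standalone script.

```python
from urllib.parse import urlsplit, parse_qsl

# Assumed parameter names; adjust to the store's own filters and sorters.
FILTER_PARAMS = {"collection_name", "material", "style", "dir", "order"}


def robots_meta_tag(url):
    """Return a noindex,follow tag for filtered/sorted URLs, else ''."""
    query = urlsplit(url).query
    names = {name for name, _ in parse_qsl(query, keep_blank_values=True)}
    if names & FILTER_PARAMS:
        return '<meta name="robots" content="NOINDEX,FOLLOW" />'
    return ""  # no tag: the page stays indexable by default


print(robots_meta_tag("https://www.store.com/chairs/dining-chairs?material=Teak"))
print(repr(robots_meta_tag("https://www.store.com/chairs/dining-chairs")))
```

Returning an empty string for the plain category page relies on the default behavior of search engines, which is to index and follow when no robots tag is present.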

URL parameters specification in Search Console

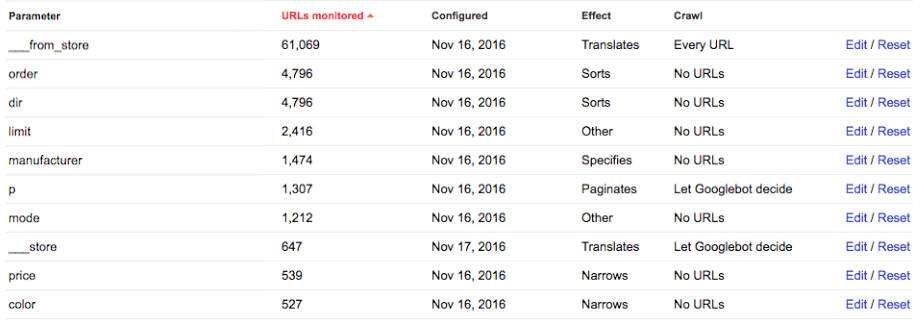

Google’s Search Console also has a dynamic URL parameter handling tool (under the “Crawl” section), which was introduced to let webmasters instruct Google’s crawlers how to handle each dynamic URL parameter they encounter.

Webmasters can list all the parameters based on the store architecture and specify what effect the specific parameter has (e.g. sorts, paginates, narrows, specifies content) and how Googlebot should crawl URLs with the respective parameter (e.g. crawl all pages that include this parameter or do not crawl any page that includes this parameter).

Google Search Console URL parameters handling tool

Even though the dynamic URL parameter handling tool has become more effective since Google first introduced it, I would not suggest relying on it alone to deal with faceted navigation systems. It is still worth using, even alongside robots.txt and meta robots tags + canonicals.

Do NOT Use Robots.txt to Solve Overindexation

Supposedly, the easiest of the solutions is to use the robots.txt file to disallow search engines from crawling the pages that include URL parameters added by the faceted navigation system. To disallow crawling of all URLs with parameters after the question mark (?), a single line in the robots.txt file would do: Disallow: /*?

To disallow crawling of specific URL parameters, each respective parameter would need to be added to the robots.txt disallow list. So, for example, to disallow crawling of the product listing sorting parameters (dir=…&order=…), the following two lines would be added to the robots.txt file:

Disallow: /*?*dir=

Disallow: /*?*order=

This might be the case when some of the parameters have been dealt with by different means, for example, using rel=prev/next for pagination.
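To see which URLs such rules would actually catch, here is a small Python sketch of wildcard matching as Google documents it for robots.txt paths: `*` matches any run of characters and a trailing `$` anchors the end of the URL. Python's built-in `urllib.robotparser` is not used because it does not interpret these wildcards. The sorting rules are written here in a slash-prefixed form (`/*?*dir=`), which is an assumption about the intended patterns.

```python
import re


def rule_to_regex(rule):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape literal pieces and join them with ".*" in place of each "*".
    body = ".*".join(re.escape(piece) for piece in rule.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(path_and_query, rules):
    """True if any rule matches from the start of the path."""
    return any(rule_to_regex(rule).match(path_and_query) for rule in rules)


all_params = ["/*?"]
sorting_only = ["/*?*dir=", "/*?*order="]

print(is_disallowed("/chairs/dining-chairs?material=Teak", all_params))
print(is_disallowed("/chairs/dining-chairs", all_params))
print(is_disallowed("/chairs/dining-chairs?dir=asc&order=price", sorting_only))
print(is_disallowed("/chairs/dining-chairs?material=Teak", sorting_only))
```

Note that a match only means a compliant crawler will not fetch the URL; it says nothing about whether the URL can appear in the index.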

However, even though many SEO blog posts suggest the robots.txt file as a good way to deal with duplicate content and keyword cannibalization, it’s actually not good at all. Search engines that cannot access the filtered and sorted pages cannot pass link value from them to the pages that are eligible for crawling and indexing, so that value can be seen as lost potential PageRank.

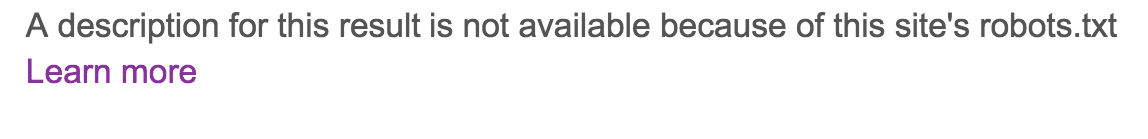

To add to that, disallowing crawling of a certain URL parameter does not prevent the URL from being included in the index. It therefore does not solve the over-indexation problem: the URLs remain eligible to show up in Google search results, but with a description under the URL like this:

Google Search Result for robots.txt disallowed URL.

So, How to Properly Implement Layered Navigation?

If you’re building an eCommerce site with a layered navigation system, use canonical tags and, in addition, add the URL parameter handling instructions in Google’s Search Console.

If you have to deal with an over-indexation issue of the layered navigation pages, add the meta “noindex, follow” tags to the filtered/sorted pages with dynamic URL parameters. This can be done by writing a script with rules that add the correct tag to the layered navigation system URLs. In addition, use the “Remove URLs” tool in Search Console to adjust Google’s index.

And do not use the robots.txt file to deal with the over-indexation problem, as this will only restrict crawlers from crawling the pages, not from indexing them.

If you need help dealing with Magento SEO issues, check out our services or shoot us a message at [email protected].
