Billions of questions are answered by AI engines every single day. Tools built on large language models (LLMs), such as ChatGPT, Perplexity, Claude, and Gemini, are quickly becoming the first place people turn for answers. They draw on articles, blogs, product pages, and forums to generate personalized, authoritative-sounding responses.

But that doesn’t mean your site is always linked or your brand is always part of those answers. If it’s not, it’s invisible. And your content might be shaping those responses without you even knowing it.

Tracking how your brand shows up in AI-generated responses is a growing priority for digital marketers. With SEO, the goal was ranking on page one. Now it’s about being present in the answer itself, and about how you’re presented there.

Our guide will show you how to track your brand’s visibility inside today’s leading AI engines. Learn how different tools generate responses, how to test prompts your audience might use, and how to interpret what shows up and what doesn’t. With the right approach, tracking AI visibility will uncover new profitable opportunities.

Key takeaways

- LLM visibility means your brand appears in AI-generated answers, whether through citations, summaries, or paraphrased content, and often without attribution.

- Prompt testing helps you measure your presence by simulating real user queries across tools like ChatGPT, Perplexity, Claude, Gemini, and others.

- Structured, clearly written content increases your chances of inclusion, especially when using FAQs, comparisons, schema markup, and consistent naming.

- Tracking over time reveals patterns, helping you refine content and stay visible.

What is LLM visibility?

LLM visibility is how your brand, content, or products show up in the answers that AI tools generate. It can be citations or direct quotes, but also summaries, comparisons, recommendations, or explanations that shape what users see and believe.

There are different levels of visibility across these tools. Sometimes your brand is cited directly with a source link. Other times, your product description or a snippet of your content is paraphrased without attribution. And in many cases, your competitors might be included while you’re left out entirely.

Tracking LLM visibility means looking at:

- Presence – are you mentioned at all?

- Position – are you the first result or buried in a list?

- Format – is the reference a direct quote, citation, paraphrase, or something else?

- Context – is your brand portrayed accurately, or are outdated or misleading descriptions being pulled in?

These signals, first and foremost, tell you if you’re part of the conversation. They also show how AI frames your brand in relation to the topic, the user’s intent, and your competitors.
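As a rough illustration, the first two signals (presence and position) can be checked automatically once you have the text of an AI answer. The sketch below is a minimal example under stated assumptions: `mention_summary` is a hypothetical helper, "ExampleCRM" is a made-up brand, and position is simply the order in which brands are first mentioned. Format and context still need a human read.

```python
import re

def mention_summary(answer: str, brands: list[str]) -> dict[str, dict]:
    """Report, per brand, whether it appears and its rank by first mention."""
    first_hits = {}
    for brand in brands:
        # Whole-word, case-insensitive match; assumes brand names start and
        # end with a letter or digit.
        match = re.search(rf"\b{re.escape(brand)}\b", answer, flags=re.IGNORECASE)
        if match:
            first_hits[brand] = match.start()

    # Brands ordered by where they first appear in the answer.
    ranked = sorted(first_hits, key=first_hits.get)
    return {
        brand: {
            "present": brand in first_hits,
            "position": ranked.index(brand) + 1 if brand in first_hits else None,
        }
        for brand in brands
    }

# Illustrative answer text, not a real AI response.
answer = "For small B2B teams, HubSpot is a common pick. Salesforce suits larger orgs."
summary = mention_summary(answer, ["Salesforce", "HubSpot", "ExampleCRM"])
print(summary)
```

A brand that is absent gets `position` set to `None`, which keeps "not mentioned" distinct from "mentioned last".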

Unlike traditional SEO, where page rankings are easy to benchmark, LLM visibility requires a more nuanced, hands-on approach. But it also opens the door to new insights about your content’s clarity and authority.

How AI models decide what to show

When you ask an AI tool a question, instead of pulling the answer from a single source, it builds one by scanning, combining, and rewriting information based on what it’s been trained on or what it can retrieve in real time. The sources behind those answers determine what gets included and how your brand is presented, if at all.

🚀 Quick takeaway

LLMs combine info from training data, live results, structured content, and repeat mentions across the web to build answers.

Here’s what influences that process:

- Pre-trained data

Some models (like ChatGPT in default mode or Claude without search enabled) rely on datasets collected during their last training cycle. These are snapshots of the internet taken months or even years ago: a mix of public websites, articles, forums, product listings, and general web content. If your content wasn’t widely available or well-structured at the time, it likely won’t be referenced.

- Real-time search results

With browsing enabled, tools like Perplexity, Gemini, and ChatGPT can pull in live results. Their responses often include citations and reflect what’s currently published on the web. Your content needs to be recent and structured to make it easy for the AI to extract and reuse.

- Structured data and authority signals

Content that includes schema markup, labeled sections, clear titles, or FAQs is easier for LLMs to parse and reuse. Structured content increases the chance your information will be used in answers, even without attribution. Domain authority, link profiles, and how often others reference your content also factor in. That’s why the same directories and marketplaces keep getting mentioned.

- Context reinforcement

If your brand is consistently mentioned across multiple sources (third-party sites, forums, comparison articles), especially about specific topics, AI tools are more likely to include it. Even if the mention isn’t on your own site, it contributes to your presence in AI-generated answers.

Building a prompt testing strategy

Before you can measure how often your brand appears in AI-generated answers, you need to think like your audience. They’re probably not searching for your brand name but asking questions and looking for recommendations. Your goal here is to simulate those queries and see how AI tools respond. Here’s how to approach it!

Start with real-world questions

Consider what your customers might type into an AI engine when trying to solve a problem your products or services address. Avoid branded queries unless you’re testing for navigational intent. What matters most is how you appear in the generic, high-intent prompts your competitors are also trying to win. Prompt examples:

- Informational prompts, e.g., “What’s the best CRM for small B2B teams?”

- Comparative prompts, e.g., “HubSpot vs Salesforce for growing startups”

- Category-based prompts, e.g., “Top Shopify agencies for fashion brands”

- Niche use cases, e.g., “Eco-friendly packaging suppliers in Canada”

Create your prompt bank

Build a simple spreadsheet or doc where you store your full list of prompts, the intent behind each (informational, commercial, etc.), and the ideal outcome (brand mention, product positioning, etc.). Aim for 10–20 prompts to start, covering different funnel stages and customer segments. You can expand later once you see which patterns are worth tracking more closely.
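If you would rather keep the prompt bank in a file that scripts can read, one possible shape is sketched below. The column names (`prompt`, `intent`, `ideal_outcome`, `funnel_stage`) and the `PromptEntry` class are assumptions made for illustration, not a required format. The example prompts come from the list above.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class PromptEntry:
    prompt: str
    intent: str          # e.g. "informational", "commercial"
    ideal_outcome: str   # e.g. "brand mention", "product positioning"
    funnel_stage: str    # hypothetical labels: "awareness", "consideration"

PROMPT_BANK = [
    PromptEntry("What's the best CRM for small B2B teams?",
                "informational", "brand mention", "awareness"),
    PromptEntry("HubSpot vs Salesforce for growing startups",
                "commercial", "product positioning", "consideration"),
    PromptEntry("Top Shopify agencies for fashion brands",
                "commercial", "brand mention", "consideration"),
]

def to_csv(entries: list[PromptEntry]) -> str:
    """Serialize the prompt bank to CSV text that any spreadsheet can open."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PromptEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()

csv_text = to_csv(PROMPT_BANK)
print(csv_text)
```

Saving `csv_text` to a file gives you the same spreadsheet described above, with the advantage that the prompt list can be reused by any script that runs the tests.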

Account for different phrasing

LLMs respond differently depending on how a question is asked, and small changes can shift which sources get pulled and how your brand is framed. Test variations like:

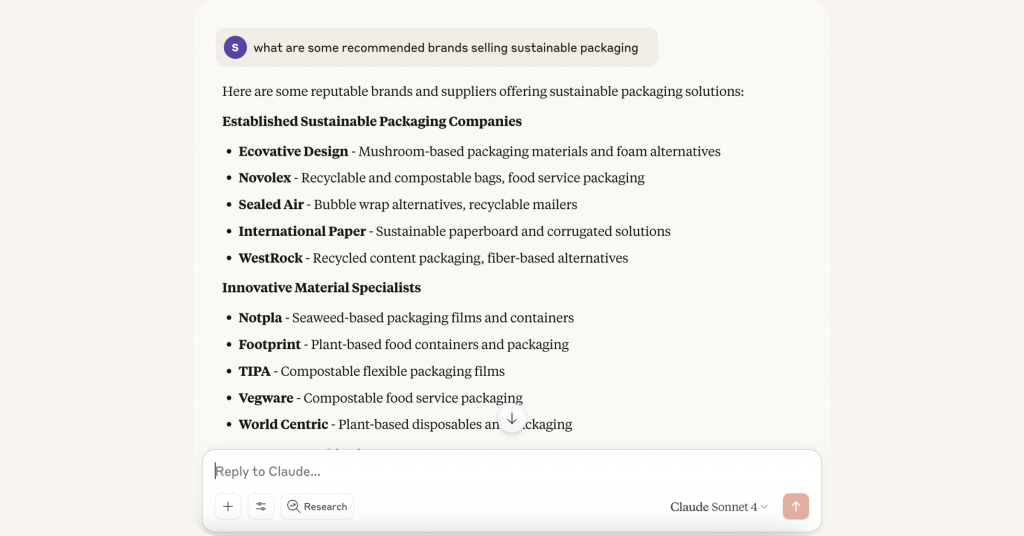

- “What are the best…”

- “Who are some recommended…”

- “Can you compare…”

- “What company offers…”

AI platforms to track visibility

Not all AI engines work the same way, so understanding how each one behaves helps you decide what to track and how to interpret the results.

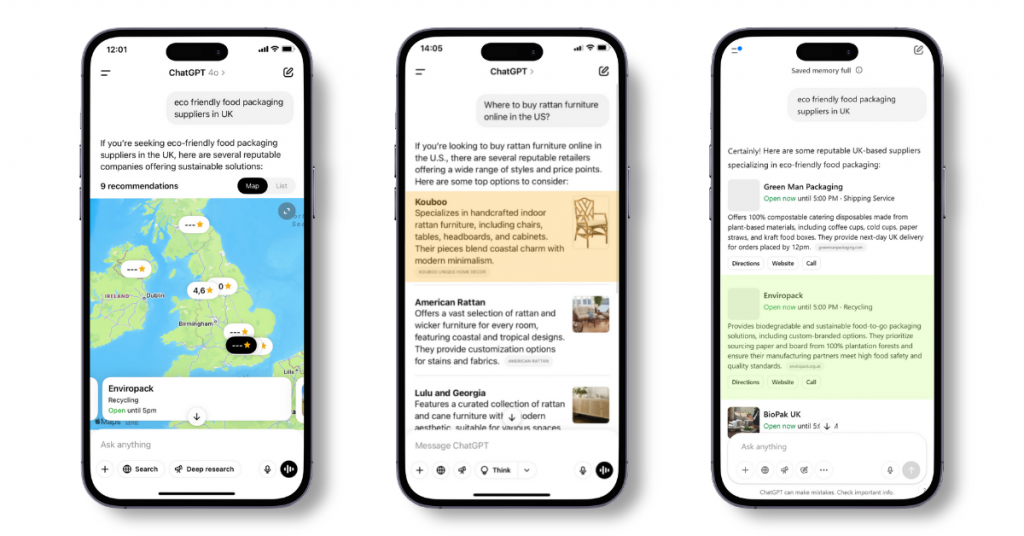

ChatGPT (OpenAI)

- Free version (GPT-3.5) runs on older, static data, no browsing.

- Paid version (GPT-4) may have browsing turned on, depending on your settings.

- Citations are inconsistent – sometimes you’ll get links, other times only a summary.

- Useful for testing how well your brand is understood and paraphrased.

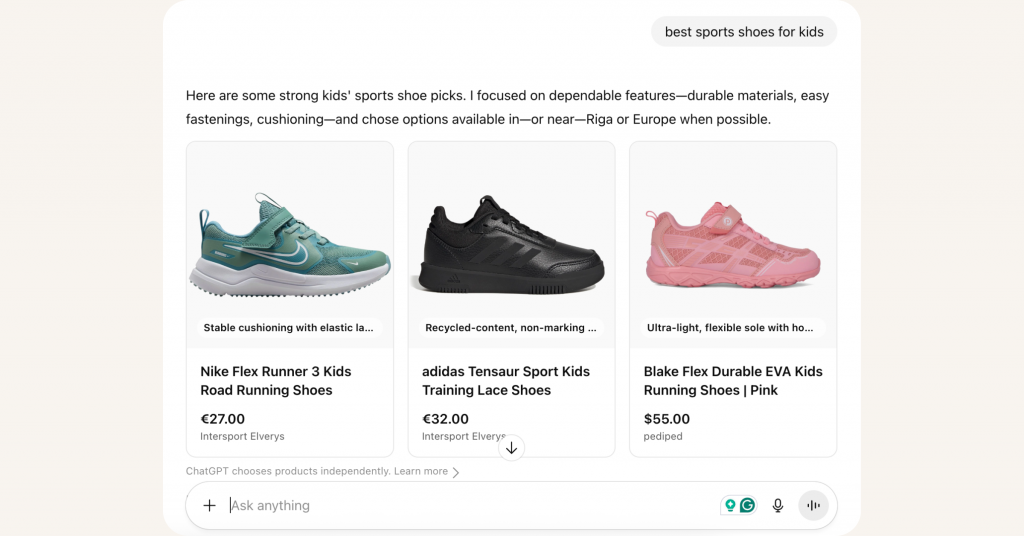

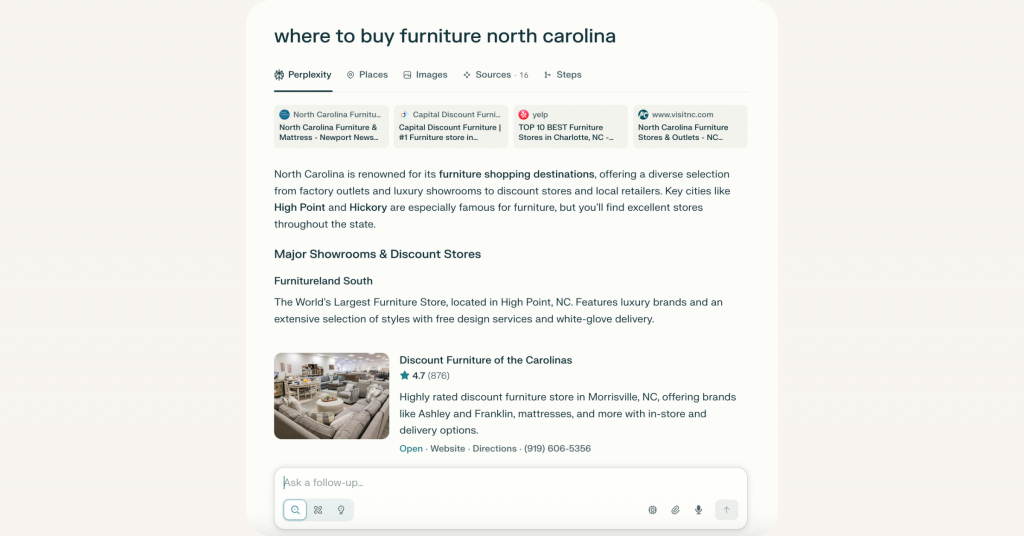

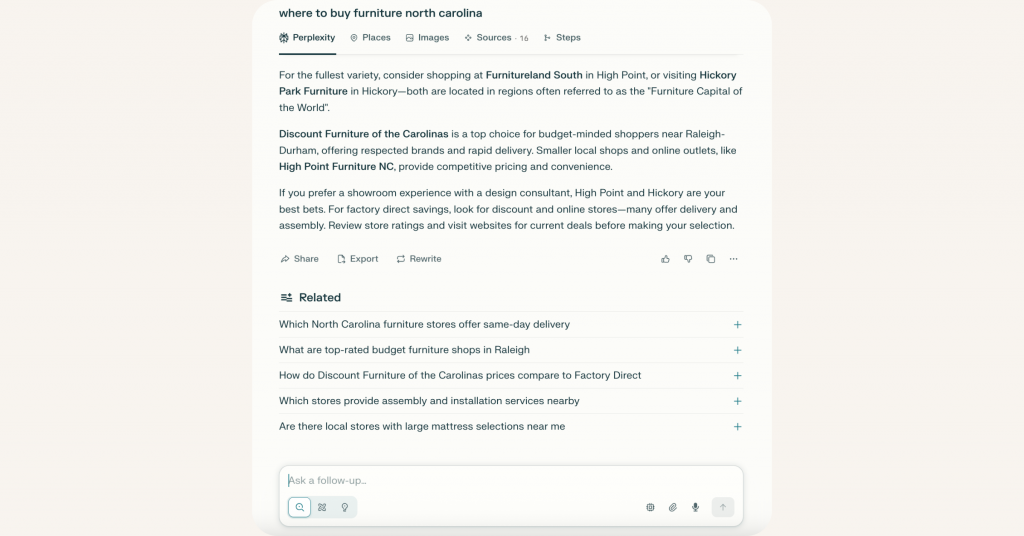

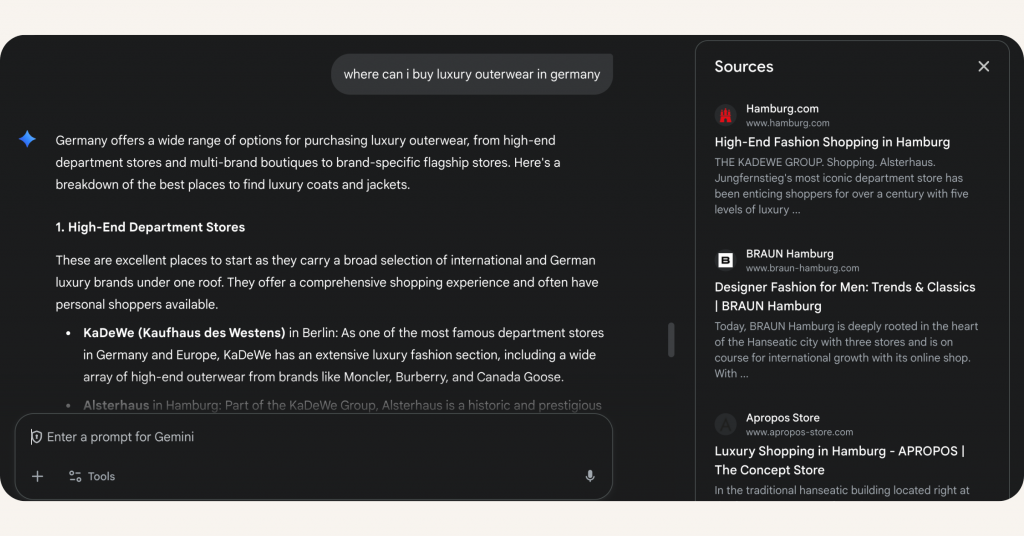

Perplexity

- Uses live search results by default.

- Citations are visible and clickable for nearly every answer.

- Great for identifying exactly which pages are influencing the AI’s output.

- Follow-up questions let you test brand recall and consistency over multiple steps.

Claude (Anthropic)

- Strong at giving well-organized answers, often with clear sourcing.

- Tends to cite sources for factual or comparison-based prompts.

- A good option if your site includes how-to content, guides, or research-driven material.

Gemini (Google)

- Pulls from Google Search, YouTube, and other live content.

- Prioritizes sources that already perform well in Google’s ecosystem.

- Structured content and schema are especially helpful here.

Microsoft Copilot (Bing)

- Built on OpenAI models, integrated with Bing.

- Uses live web content with hoverable citations.

- Especially strong in product-related queries, local business searches, and technical FAQs.

DeepSeek

- Rising open-source model with strengths in technical and multilingual prompts.

- Some interfaces show citations, but behavior can vary.

- Useful if your brand targets academic, global, or niche expert audiences.

Logging and analyzing LLM visibility

Once you’ve tested your prompts, it’s time to collect what shows up. You’ll need to be able to spot patterns, find weaknesses, and understand how your content performs across different models. A simple spreadsheet or Notion board works fine as long as it’s consistent.

Why this matters

LLMs don’t return the same result every time. Even with the same prompt, you might show up once and disappear the next. Logging multiple sessions across tools gives you a clearer signal about frequency and positioning over time.

🚀 Quick takeaway

One prompt is never enough – run the same query multiple times across tools to spot trends.

What to track for each prompt

- Prompt tested

- AI tool used

- Date tested (LLMs change constantly, so timestamp everything)

- Brand mentioned? Yes/No. If yes, how is it phrased?

- Type of visibility – citation, paraphrase, brand name only, other

- Competitors mentioned

- Source links to your pages

- Notes – was your messaging accurate? Anything misrepresented or outdated?
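The fields above map naturally onto one row per test. The sketch below shows one way to structure that log in code. The `VisibilityLogRow` class, its field names, and the sample values (including the date) are placeholders chosen for illustration, not recorded results.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields

@dataclass
class VisibilityLogRow:
    prompt: str
    tool: str
    date_tested: str            # ISO date, so rows sort chronologically
    brand_mentioned: bool
    phrasing: str = ""          # how the brand was described, if at all
    visibility_type: str = ""   # citation / paraphrase / brand name only / other
    competitors: str = ""       # semicolon-separated
    source_links: str = ""      # links to your own pages, semicolon-separated
    notes: str = ""

def write_log(handle, rows: list[VisibilityLogRow]) -> None:
    """Write a header plus one CSV line per logged test."""
    writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(VisibilityLogRow)])
    writer.writeheader()
    for row in rows:
        writer.writerow(asdict(row))

# Placeholder rows for illustration only.
rows = [
    VisibilityLogRow(
        prompt="HubSpot vs Salesforce for growing startups",
        tool="Perplexity",
        date_tested="2025-01-15",
        brand_mentioned=True,
        visibility_type="citation",
        competitors="HubSpot;Salesforce",
    ),
    VisibilityLogRow(
        prompt="HubSpot vs Salesforce for growing startups",
        tool="ChatGPT",
        date_tested="2025-01-15",
        brand_mentioned=False,
        notes="Only competitors listed",
    ),
]

buffer = io.StringIO()
write_log(buffer, rows)
log_csv = buffer.getvalue()
print(log_csv)
```

Swapping `io.StringIO()` for an open file handle would persist the log. The in-memory buffer is used here only to keep the example self-contained.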

Optional extras

- Prominence score – how high was your brand featured in the response?

- Sentiment and tone of the mention

- Consistency when testing the same prompt multiple times

Tools to track brand mentions in AI answers

Tracking visibility manually is a solid way to start. But if you manage many prompts and track several competitors at once, you’ll quickly outgrow spreadsheets. Here’s a look at some of the leading tracking tools and the teams each one suits best.

Remember! Even with the right tool, you’ll still need a strong testing process. But these platforms will save hours of manual work and help you track real change over time.

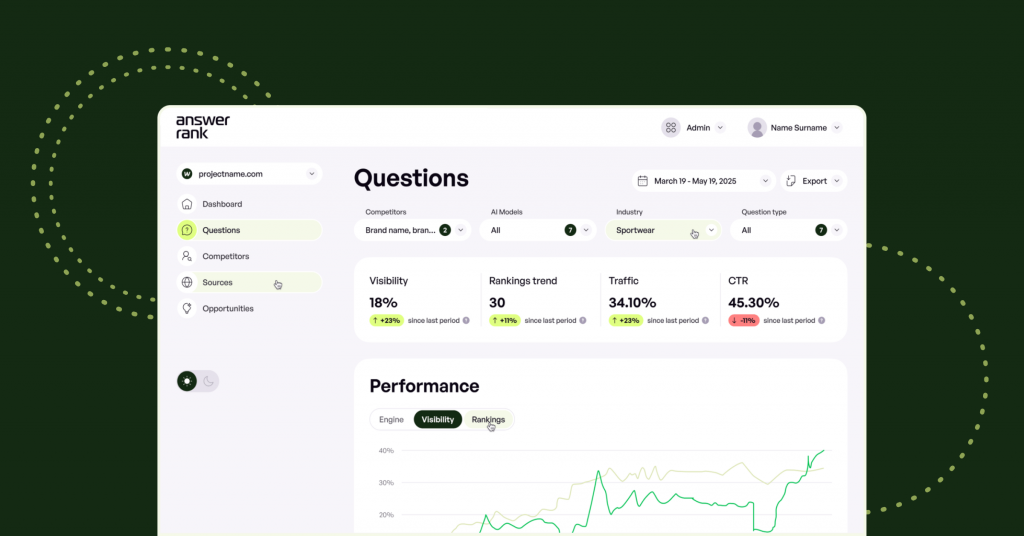

AnswerRank

Best for: SEO leads, content strategists, performance marketers

Team size: Medium to large agencies or in-house SEO teams

Purpose-built for LLM visibility. Lets you test real prompts and track how often your brand or competitors show up. Includes dashboards for prompt performance, position tracking, and trend overviews. Helpful in identifying gaps and making decisions across multiple content teams.

LLMrefs

Best for: Technical marketers and SEO analysts

Team size: Mid-market to enterprise

Covers a wide range of AI tools, including ChatGPT, Claude, Gemini, Perplexity, and Grok. Tracks your brand’s presence and also highlights model biases. Includes an LLMrefs Score – a proprietary metric that helps you benchmark visibility over time.

Scrunch AI

Best for: Marketing teams focused on brand consistency and messaging

Team size: Agencies and growing DTC brands

Positions itself as a brand presence monitor in AI. Tracks how your company is described across tools, with a focus on sentiment and accuracy. Suitable for brands with strict messaging guidelines or ongoing PR/communications monitoring.

Peec AI

Best for: SMBs and small marketing teams

Team size: Startups, boutique agencies, consultants

Simple and user-friendly, with strong coverage across major AI engines. Tracks mentions, sentiment, scoring, and citation patterns. A good entry-level tool for teams that want better visibility without dealing with steep learning curves.

Profound AI

Best for: Enterprise SEO departments and data-heavy organizations

Team size: Enterprise

Offers deep customization, high-volume prompt testing, and integration into existing analytics workflows. Ideal for teams with internal analysts or BI resources who need granular tracking across large sets of prompts.

Semrush (AI Visibility Suite)

Best for: Existing Semrush users expanding into AEO

Team size: All

Semrush now includes AI visibility tracking as part of its broader SEO toolkit. It’s useful if you already rely on Semrush for keyword tracking, competitor benchmarking, or content audits and want to add AI response tracking to your workflow.

Patterns to look for in LLM tracking results

Once you’ve collected enough data from your prompt testing, the goal is to understand why your brand does or doesn’t show up, and what to do next. Watch for the following patterns!

Consistent competitors

If the same 2–3 brands appear across multiple prompts, tools, and sessions, that’s a signal. They’ve likely published the kind of content LLMs prefer or been cited enough across the web to earn a default spot. Study what they’re doing: where they’re mentioned, what content formats they use, how they structure information, and which domains they appear on. You’ll want to do something similar.

🚀 Quick takeaway

If your competitors keep showing up and you don’t, they’ve likely nailed the content formats and source placements LLMs prefer.

Content types that get reused

Which formats are the AI tools pulling from most often: blog posts, product pages, comparison charts, directories, or community forums? If your site isn’t being cited but others are, check how those formats differ from yours. In many cases, structured content like FAQs, “best of” lists, and definition-based pages is easier for LLMs to parse and reuse.

Platform-specific behavior

ChatGPT might paraphrase your blog post without citing it. Perplexity might show a competitor’s site five times in a row. Claude might prefer cleaner documentation or research-heavy articles. Each tool has its own tendencies, and tracking across platforms helps you understand what kind of content performs where.

Incorrect or outdated information

Sometimes your brand does appear, but AI uses old messaging, legacy or sold-out product names, or inaccurate positioning, often lifted from third-party sites or cached pages that are still referenced. Visibility tracking should double as quality control: note what’s wrong as well as whether you appear.

Paraphrase without credit

You might find that your messaging or product descriptions are being summarized or reworded without a link or mention. The good news is that the content is working, but not in a way that builds brand equity. This is common with unstructured blog content or generalist explainer pages.

To spot these patterns clearly, it helps to:

- Re-run the same prompt 3–5 times across tools

- Log every unique mention or phrasing

- Track what source types show up most often

- Compare your content format and wording with the pages that do get cited
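For the "which sources show up most often" step, a small tally over the cited links in your log is usually enough. The sketch below assumes you have collected cited URLs as plain strings. The `top_cited_domains` helper is hypothetical and the URLs are placeholders on reserved example domains.

```python
from collections import Counter
from urllib.parse import urlparse

def top_cited_domains(cited_urls: list[str], n: int = 3) -> list[tuple[str, int]]:
    """Count how often each domain appears among the cited links."""
    domains = []
    for url in cited_urls:
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com as one source
        if host:
            domains.append(host)
    return Counter(domains).most_common(n)

# Placeholder links, standing in for citations gathered across repeated runs.
cited = [
    "https://www.example.com/best-crm-tools",
    "https://example.com/crm-for-startups",
    "https://example.org/crm-review",
]
top = top_cited_domains(cited)
print(top)
```

Domains that keep topping this list are the ones worth studying first when you compare formats and wording.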

Soon enough, you’ll move from reactive “We showed up once!” to strategic “We’re being positioned this way across three tools, but competitor X has a stronger presence because they’re ranking in source Y.”

Read some of our client success stories after answer engine optimization (AEO):

- AEO Makes Novatours #1 Travel Agency in AI Search

- How AEO Helped Enviropack Become a Top AI Pick for Sustainable Packaging

- AI Search Optimization Helps Kouboo Break Into the Top 3 AI Answers

How to improve your LLM visibility

Once you know how AI tools are using your content, you can start adjusting your strategy. Here’s how to improve your chances of being included in the answers that matter:

- Strengthen clarity because AI tools often skip over vague descriptions. Instead of writing “the best hiking gear,” say “TrailFlex waterproof hiking boots for wet mountain trails.” Name products, categories, use cases, and locations clearly, so that AI doesn’t have to guess.

- Target known citation sources, as LLMs frequently reference content from trusted aggregators, directories, media sites, and high-authority blogs. If your competitors are getting cited, find a way into those pages or create something similar that earns backlinks and visibility.

- Use structured formats – content in clean, labeled sections (like FAQs and comparison tables) is more likely to be used in an answer. Clear H2/H3 headings, bullets, and schema markup also make your content easier to interpret.

- Monitor and refresh outdated content if AI tools are pulling from legacy pages or misrepresenting your current offering. Set up regular reviews of key URLs, especially those tied to high-intent queries or competitive categories.

- Cover specific queries, not just keywords, and don’t skip the real-world questions people ask (“best email marketing software” → “what’s better for small teams: Mailchimp or Klaviyo?”). The more directly your content answers those kinds of prompts, the more likely it is to show up in AI-generated responses.
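To make the schema markup point concrete, here is a minimal sketch that builds FAQ markup using the schema.org `FAQPage` type. The `faq_jsonld` helper is hypothetical, and the question and answer text are placeholders built around the TrailFlex example above. Whether a given AI tool actually reads this markup is not guaranteed.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)

# Placeholder Q&A for illustration; replace with your real product facts.
markup = faq_jsonld([
    ("Are TrailFlex hiking boots waterproof?",
     "Yes. TrailFlex waterproof hiking boots are designed for wet mountain trails."),
])
print(markup)
```

The resulting string would typically be placed on the page inside a `<script type="application/ld+json">` tag, next to the visible FAQ it describes.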

Conclusion

Expect LLM visibility to shift as sources get refreshed and prompts change. One week, your brand might appear in 7 out of 10 tests, while the next, you’re out entirely. That’s normal. Tracking regularly matters more than chasing quick wins.

If you’re running SEO or content strategy, you need to treat AI-generated answers the same way you treat SERPs: as a space where your brand needs to compete, using clarity, presence, and consistency.

Start by tracking how your brand shows up today by running prompts that reflect real customer questions. Log the data and spot the gaps, then make your content easier to find and use and harder to ignore.

AEO and AI visibility work together, so getting ahead of it now puts you in a stronger position as AI continues to shape how customers make decisions.

Need help finding out how visible your brand really is? Our team offers a free AEO audit that highlights where you are and where you can improve. We also build AI solutions for eCommerce brands, whether you have a new idea in mind or something that needs fixing. Let’s work on something great together!
