The problem we solve

Evaluating task submissions, especially when they involve multiple components and nuanced criteria, can be slow, inconsistent, and exhausting to do by hand. Without a structured system, grading often becomes subjective and difficult to scale.

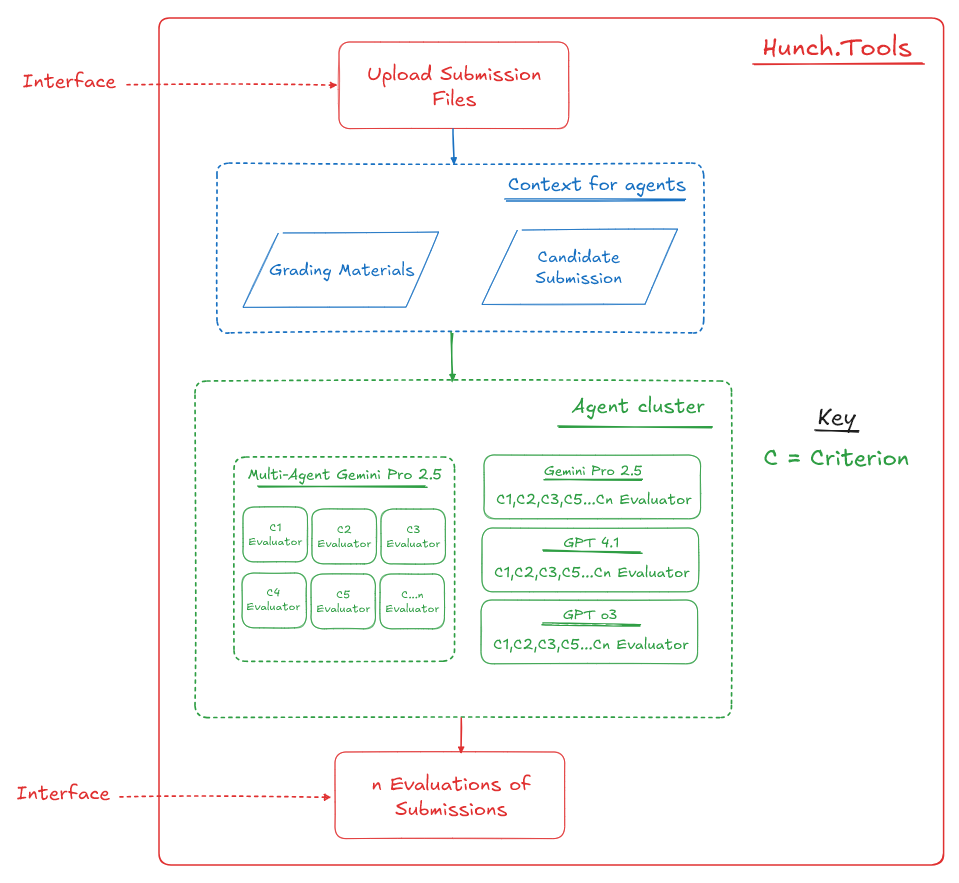

To bring structure, speed, and consistency to this process, we built a custom grading engine using Hunch Tools as the foundation. Third-party hiring tools didn’t quite offer the flexibility or depth we needed, so we took matters into our own hands.

How it works

Context for LLM

- Grading materials – Rubric, benchmark examples, and the task document

- Submission files – Slideshow and video transcript
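As a rough illustration of how these inputs might be combined into one prompt, here is a minimal Python sketch. It is not how Hunch Tools does it internally; the `GradingContext` class, its field names, the `build_prompt` helper, and the section labels are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class GradingContext:
    """Inputs handed to each evaluator (all field names are hypothetical)."""
    rubric: str
    benchmark_examples: list[str]
    task_document: str
    slideshow_text: str
    video_transcript: str


def build_prompt(ctx: GradingContext) -> str:
    """Join grading materials and submission files into one labeled prompt."""
    # Number the benchmark examples so an evaluator can refer to them.
    benchmarks = "\n\n".join(
        f"Benchmark example {i}:\n{text}"
        for i, text in enumerate(ctx.benchmark_examples, start=1)
    )
    # Grading materials come first, submission files last (an assumed order).
    sections = [
        ("TASK DOCUMENT", ctx.task_document),
        ("RUBRIC", ctx.rubric),
        ("BENCHMARK EXAMPLES", benchmarks),
        ("SUBMISSION: SLIDESHOW", ctx.slideshow_text),
        ("SUBMISSION: VIDEO TRANSCRIPT", ctx.video_transcript),
    ]
    return "\n\n".join(f"## {title}\n{body.strip()}" for title, body in sections)
```

Keeping every input under its own labeled section makes it easier for an evaluator to tell the grading materials apart from the candidate's work.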

Processing

The submission enters a multi-step evaluation pipeline in Hunch Tools, powered by a blend of eight AI agents. Here’s how it’s structured:

- Multi-Agent Evaluator – Built with Gemini 2.5 Pro, this system uses 5 agents to independently score each criterion. Their scores are then aggregated to deliver a balanced, transparent evaluation.

- Single-Agent Evaluators – 3 standalone LLMs (Gemini 2.5 Pro, GPT-4.1, and GPT-3.5-turbo) assess the full submission holistically. These provide broad, top-level insights to complement the detailed criteria scores.

All models run within a single Hunch Tools workflow, making multi-agent orchestration seamless and accessible.
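The aggregation step of the multi-agent evaluator can be sketched as follows. The aggregation rule is not spelled out here, so this example assumes a simple mean per criterion and also reports the spread between agents. The `aggregate_scores` function, the criterion names, and the 1-5 scale are hypothetical.

```python
from statistics import mean, pstdev


def aggregate_scores(agent_scores: list[dict[str, float]]) -> dict[str, dict[str, float]]:
    """Combine per-criterion scores from several independent agents.

    agent_scores holds one {criterion: score} dict per agent.
    Returns, per criterion, the mean score and the spread between agents.
    """
    if not agent_scores:
        raise ValueError("need at least one agent's scores")
    summary = {}
    for criterion in agent_scores[0]:
        values = [scores[criterion] for scores in agent_scores]
        summary[criterion] = {
            "mean": round(mean(values), 2),
            # Population standard deviation: a high value flags disagreement.
            "spread": round(pstdev(values), 2),
        }
    return summary


# Hypothetical scores from five agents on an assumed 1-5 scale.
example = [
    {"clarity": 4, "depth": 3},
    {"clarity": 5, "depth": 3},
    {"clarity": 4, "depth": 4},
    {"clarity": 4, "depth": 3},
    {"clarity": 3, "depth": 2},
]
result = aggregate_scores(example)
```

Reporting the spread next to the mean is one way to keep the result transparent: a criterion on which the agents disagree can be flagged for human review.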

Output

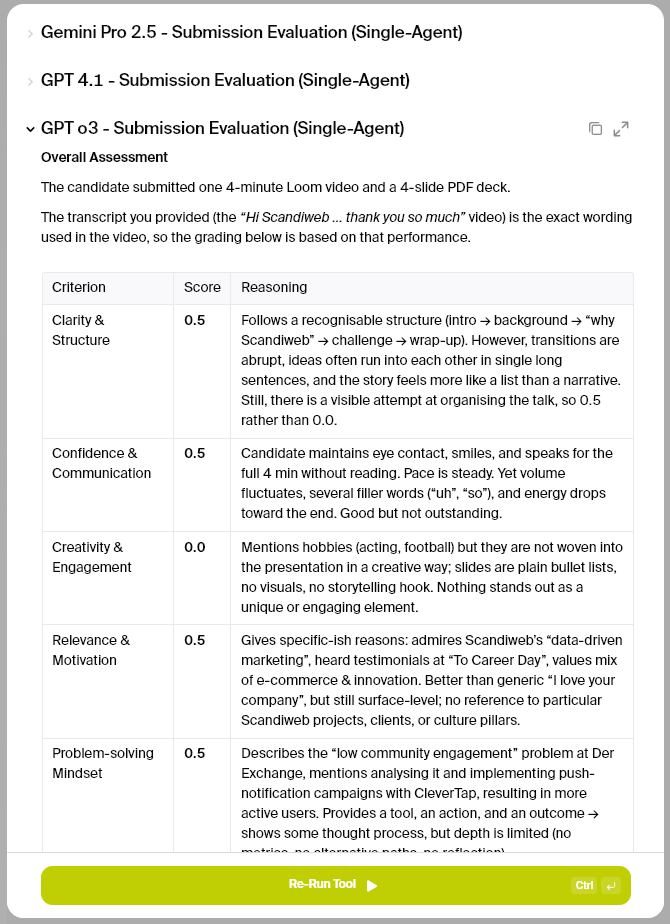

Each submission produces 4 outputs:

- An aggregated, criteria-by-criteria evaluation from the multi-agent system

- 3 complete submission assessments from individual LLMs

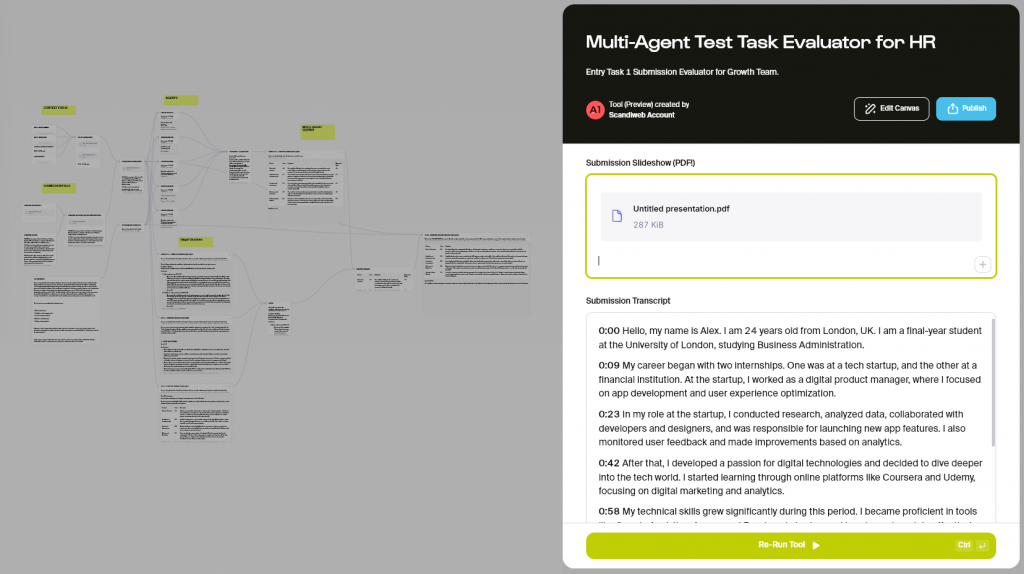

All results appear on the canvas interface thanks to the “output” feature, which lets you mark specific nodes as “outputs”. This adds to the convenience of Hunch, which can convert any workflow (canvas) into a “tool”: a shareable interface for running the whole workflow in the most accessible way.

Example walkthrough

Impact

The evaluation process now takes minutes, without sacrificing quality or depth. It ensures fairness, reduces workload, and makes grading high-volume submissions scalable.

Built with

- Hunch Tools – Workflow engine and logic

- Gemini 2.5 Pro – Criteria-based agent evaluator + holistic scorer

- GPT-4.1 – Holistic evaluator

- GPT-3.5-turbo (“GPT o3”) – Holistic evaluator

Complexity

- Low

Looking to leverage modern AI tools within your company? Get in touch and explore next steps.
