Increasing stability without increasing costs, and making sure it all functions well. Geth node stability and availability are always a work in progress, and improvements are always welcome.

For a long time we ran two separate nodes, one for the blockchain interaction microservice and one for the Oracle, under the impression that this would provide additional security. It turns out that this is not the case: the two nodes do not provide any additional safety in our infrastructure.

Instead of two dedicated nodes, it makes more sense to run two instances behind the same load balancer, which gives us redundancy: when one blockchain node goes down, there is still one working node.

Bringing a full node to life

Investigation into the fastest options for bringing a full node to life produced the following results:

- Full sync from scratch with the --fast flag takes around 2–3 days

- Exporting chain data and then re-importing it takes more than 12 hours (safest option since chaindata is packed in a backup file)

- Copying the chaindata folder takes around 2–4 hours (fastest option); after that, the blockchain is almost fully synced and only needs to fetch the last few missing blocks

There’s a clear winner: keep chaindata from a fully synced node stored in AWS Elastic File System (EFS) and, when creating a new node, copy the files from EFS to the local NVMe drive and then just turn on Geth!
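As a rough illustration of the copy-then-start approach, here is a minimal Python sketch. The function names and any paths passed to them are hypothetical and not taken from our deployment scripts. It assumes EFS is already mounted on the instance and that `geth` is on the PATH.

```python
import shutil
import subprocess
from pathlib import Path


def restore_chaindata(efs_backup: Path, local_chaindata: Path) -> int:
    """Copy a chaindata snapshot from the EFS mount to local disk.

    Returns the number of files copied so the caller can log it.
    Both paths are supplied by the caller; nothing here is AWS-specific.
    """
    if not efs_backup.is_dir():
        raise FileNotFoundError(f"no chaindata backup at {efs_backup}")
    # Start from a clean directory so stale files never mix with the snapshot.
    if local_chaindata.exists():
        shutil.rmtree(local_chaindata)
    shutil.copytree(efs_backup, local_chaindata)
    return sum(1 for p in local_chaindata.rglob("*") if p.is_file())


def start_geth(datadir: Path) -> subprocess.Popen:
    """Launch Geth against the restored data directory (assumes geth is on PATH)."""
    return subprocess.Popen(["geth", "--datadir", str(datadir)])
```

With a data directory of, say, `/mnt/nvme/geth` (an assumed mount point), the chaindata would be restored to `/mnt/nvme/geth/geth/chaindata` before calling `start_geth`. Geth then only has to fetch the blocks produced since the snapshot was taken.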

Implementing the winner

- Update Terraform to match the new infrastructure (i3.large instance for Geth and load balancer with 2 instances running at the same time), add EFS for backup storage.

- Create a deployment plan that installs all software necessary for Geth and copies chaindata from EFS.

- Create a backup mechanism that writes a chaindata backup to EFS on a weekly basis.

- Implement monitoring and health checks for Geth.

The last step is tricky, since Geth communicates through POST requests but the elastic load balancer can only make GET requests. Some middleware is needed, such as a Node.js application, that can receive the ping from the elastic load balancer, query the Geth node with the correct request, and return the correct status code to the load balancer, so that any unhealthy instance can be terminated.
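To make the GET-to-POST translation concrete, here is a minimal sketch of such a middleware. It is written in Python with only the standard library rather than Node.js, purely for illustration. It assumes Geth exposes its HTTP-RPC endpoint on the default port 8545 on the same host. The listening port 8080 and the choice of `eth_syncing` as the health signal are assumptions of this sketch, not a description of our production monitor.

```python
import json
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

GETH_RPC_URL = "http://127.0.0.1:8545"  # assumption: Geth's default HTTP-RPC port


def status_for(rpc_response: dict) -> int:
    """Map an eth_syncing JSON-RPC response to an HTTP status code."""
    # eth_syncing returns false when the node is not syncing, and an object
    # describing progress while it is still catching up.
    if "error" in rpc_response:
        return 503
    return 200 if rpc_response.get("result") is False else 503


def query_geth(url: str = GETH_RPC_URL, timeout: float = 2.0) -> dict:
    """POST an eth_syncing request to Geth and return the decoded JSON reply."""
    payload = json.dumps(
        {"jsonrpc": "2.0", "method": "eth_syncing", "params": [], "id": 1}
    ).encode()
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


class HealthHandler(BaseHTTPRequestHandler):
    """Answers the load balancer's GET with 200 (healthy) or 503 (unhealthy)."""

    def do_GET(self):
        try:
            code = status_for(query_geth())
        except (OSError, ValueError):
            code = 503  # Geth unreachable, or the reply was not valid JSON
        self.send_response(code)
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Block forever, answering health checks on the given (assumed) port."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Calling `serve()` starts the proxy, and the load balancer's health check would then point at that port. One caveat: `eth_syncing` can also return false on a node that has not found any peers yet, so a stricter check might additionally compare block numbers.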

The result

Voilà: a reliable blockchain client that works and has a quickly and easily spawnable backup, with no increase in costs!

But, wait! There’s more!

Geth Health Monitor

To help manage node spawning and the various issues related to it, a health monitor can be a lifesaver. The primary purpose of the Geth health monitor is to serve as a proxy for health check requests from the elastic load balancer (ELB). This is required because the ELB can only send a GET request to a specific endpoint, while the Geth RPC API works exclusively with POST requests. For health checks to work, we need middleware that can check Geth and respond back to the ELB.

Additionally, since it takes more than an hour to spawn a new node, this health monitor will also serve as an extension to deployment, handling all the time-consuming tasks.

These tasks have to be moved to the health monitor because AWS CodeDeploy has a time limit of 1 hour per task. Currently, a new node is spawned in 55 minutes and then requires even more time to finish syncing. As a result, a node that is not yet fully functional is introduced to the network. The health monitor will resolve this issue.

The health monitor should be able to accomplish the following tasks after deployment:

- deregister the instance from the elastic load balancer, so it doesn’t take requests and is not terminated by a failed health check,

- copy chaindata from EFS to local storage,

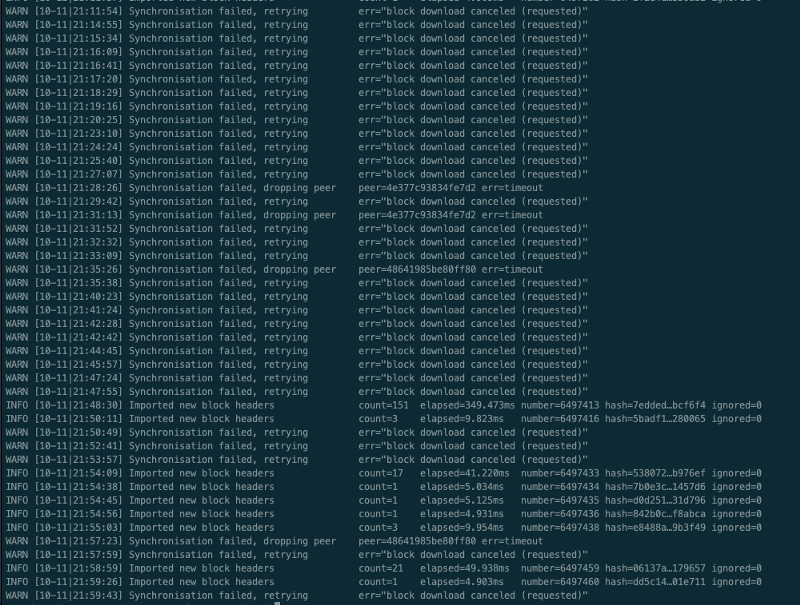

- start supervisor, which will start Geth and start the synchronisation process,

- check when synchronisation is completed and register the instance back to the load balancer, so it can start to take incoming requests.
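The sequence above could be sketched roughly as follows. Each step is passed in as a callable so the ordering logic stays independent of AWS, supervisor and Geth. All names, the polling interval and the poll limit are hypothetical choices for this sketch.

```python
import time
from typing import Callable


def bring_node_online(
    deregister: Callable[[], None],
    restore_chaindata: Callable[[], None],
    start_geth: Callable[[], None],
    is_synced: Callable[[], bool],
    register: Callable[[], None],
    poll_seconds: float = 30.0,
    max_polls: int = 480,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run the post-deployment steps in order; return True once registered."""
    deregister()         # stop traffic and health-check terminations
    restore_chaindata()  # copy chaindata from EFS to local storage
    start_geth()         # for example, by starting supervisor
    for _ in range(max_polls):
        if is_synced():
            register()   # only now may the node take incoming requests
            return True
        sleep(poll_seconds)
    return False         # still syncing; keep the node out of rotation
```

Because none of this runs inside a CodeDeploy hook, the one-hour limit no longer applies to the copy and sync steps. The node also only rejoins the load balancer after `is_synced` reports success.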

The health monitor could also have built-in backup functionality, but that is a more complex issue: the health monitors would have to talk to each other somehow, otherwise all nodes would perform backups at the same time. The easiest solution would be a shared database or something similar, where the first node that starts its backup inserts a flag signalling that a backup has started and that other nodes shouldn’t back up at this moment.

During the backup process, the Geth node needs to be shut down, so it makes sense to reuse registration and deregistration methods from synchronisation for backup archiving as well.
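The shared flag idea can be illustrated with a small sketch. SQLite stands in for the shared database here so that the example is self-contained. A real setup would need a database reachable from every node, and the table and column names below are hypothetical. The point is that a primary-key constraint lets exactly one node insert the flag.

```python
import sqlite3
import time


def init_lock_table(conn: sqlite3.Connection) -> None:
    """Create the (hypothetical) table that holds the backup flag."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS backup_lock ("
        " name TEXT PRIMARY KEY, holder TEXT NOT NULL, started_at REAL NOT NULL)"
    )
    conn.commit()


def try_acquire(conn: sqlite3.Connection, node_id: str) -> bool:
    """Insert the flag row; the primary key lets only the first node succeed."""
    try:
        with conn:  # commits on success, rolls back if the insert fails
            conn.execute(
                "INSERT INTO backup_lock (name, holder, started_at)"
                " VALUES (?, ?, ?)",
                ("chaindata", node_id, time.time()),
            )
        return True
    except sqlite3.IntegrityError:
        return False  # another node already started its backup


def release(conn: sqlite3.Connection, node_id: str) -> None:
    """Remove the flag, but only if this node is the one holding it."""
    with conn:
        conn.execute(
            "DELETE FROM backup_lock WHERE name = ? AND holder = ?",
            ("chaindata", node_id),
        )
```

A node would call `try_acquire` before deregistering itself and shutting Geth down, skip the backup if it returns False, and call `release` once the archive is safely on EFS. The `started_at` column is there so that a flag left behind by a crashed node could be expired, which this sketch does not implement.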

Curious about the blockchain project powered by this? Check out Publica.com or just drop them a line for the best technical blockchain solutions at [email protected]!
